Translation, reliability, and validity of Japanese version of the Respiratory Distress Observation Scale | PLOS ONE

Assessing the accuracy of species distribution models: prevalence, kappa and the true skill statistic (TSS) - Allouche - 2006 - Journal of Applied Ecology - Wiley Online Library

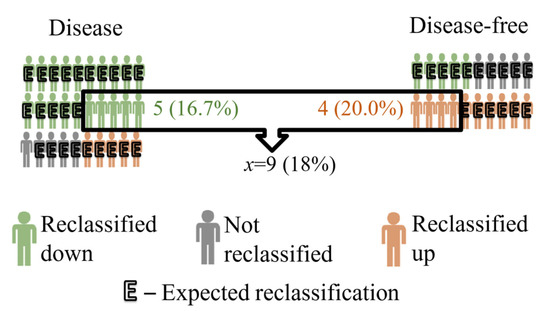

IJERPH | Free Full-Text | Cohen’s Kappa Coefficient as a Measure to Assess Classification Improvement following the Addition of a New Marker to a Regression Model

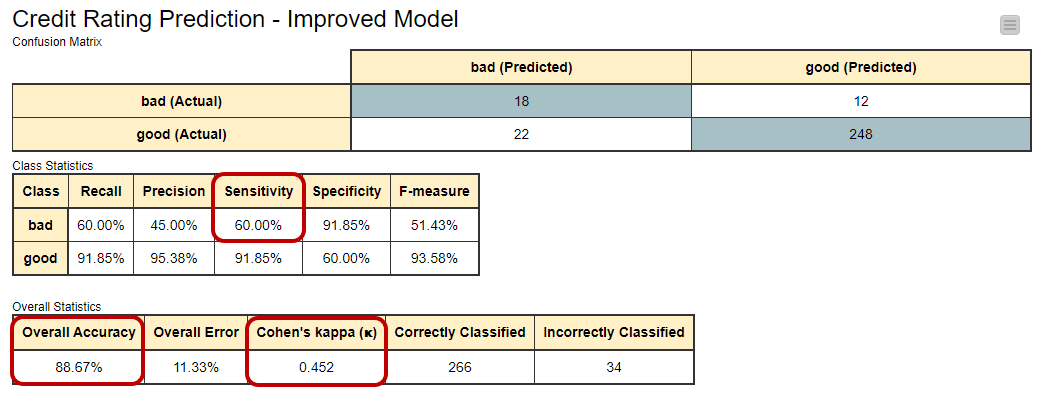

How to Calculate Precision, Recall, F1, and More for Deep Learning Models - MachineLearningMastery.com

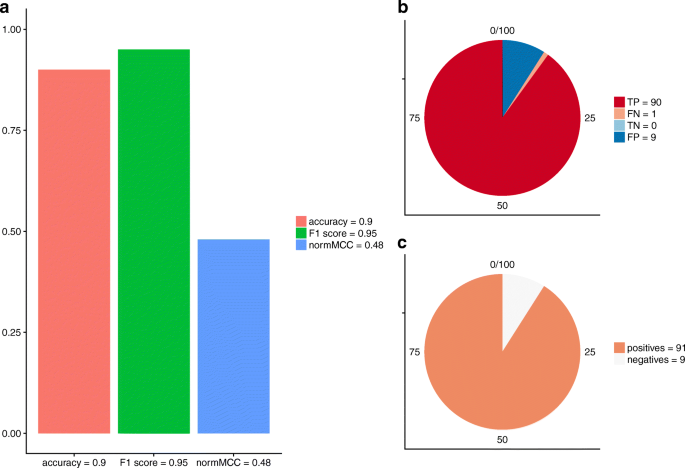

The advantages of the Matthews correlation coefficient (MCC) over F1 score and accuracy in binary classification evaluation | BMC Genomics | Full Text

The area under the precision‐recall curve as a performance metric for rare binary events - Sofaer - 2019 - Methods in Ecology and Evolution - Wiley Online Library

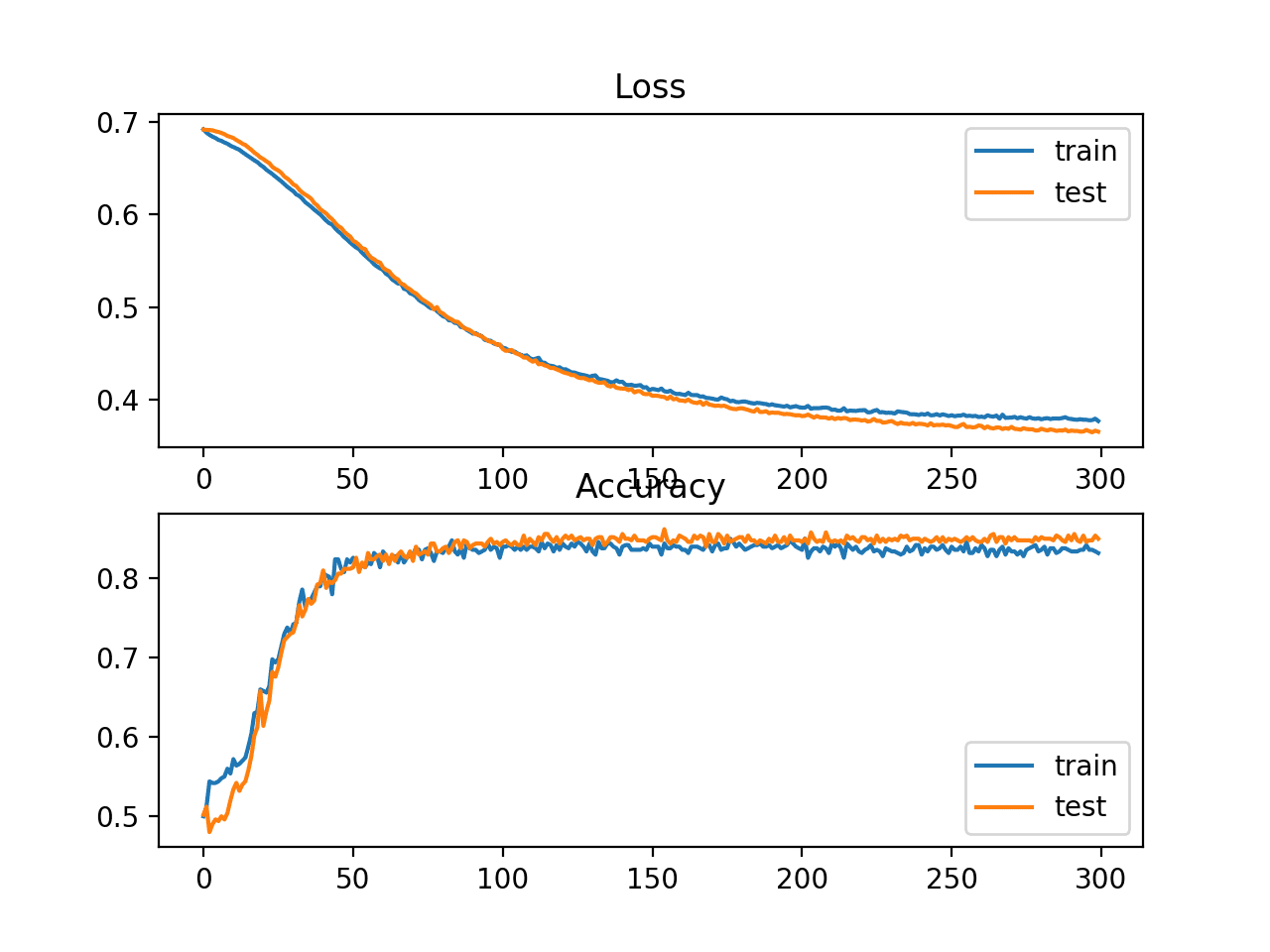

Using machine learning to understand age and gender classification based on infant temperament | PLOS ONE